🦾 ForceMimic

Force-Centric Imitation Learning with Force-Motion Capture System for Contact-Rich Manipulation

IEEE International Conference on Robotics and Automation (ICRA) 2025

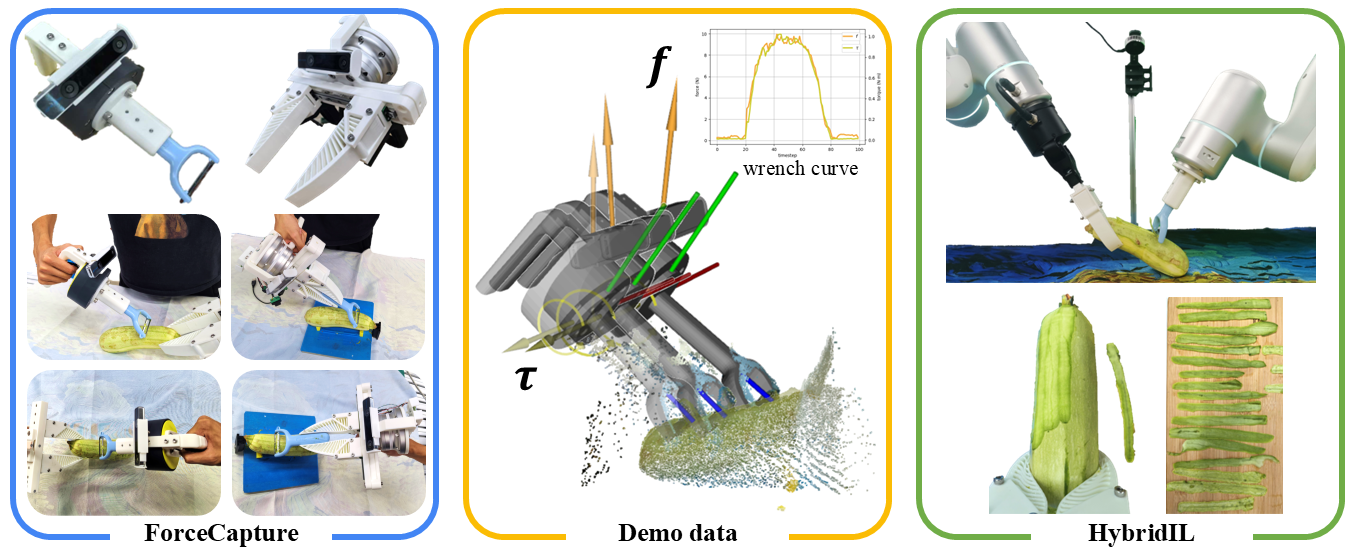

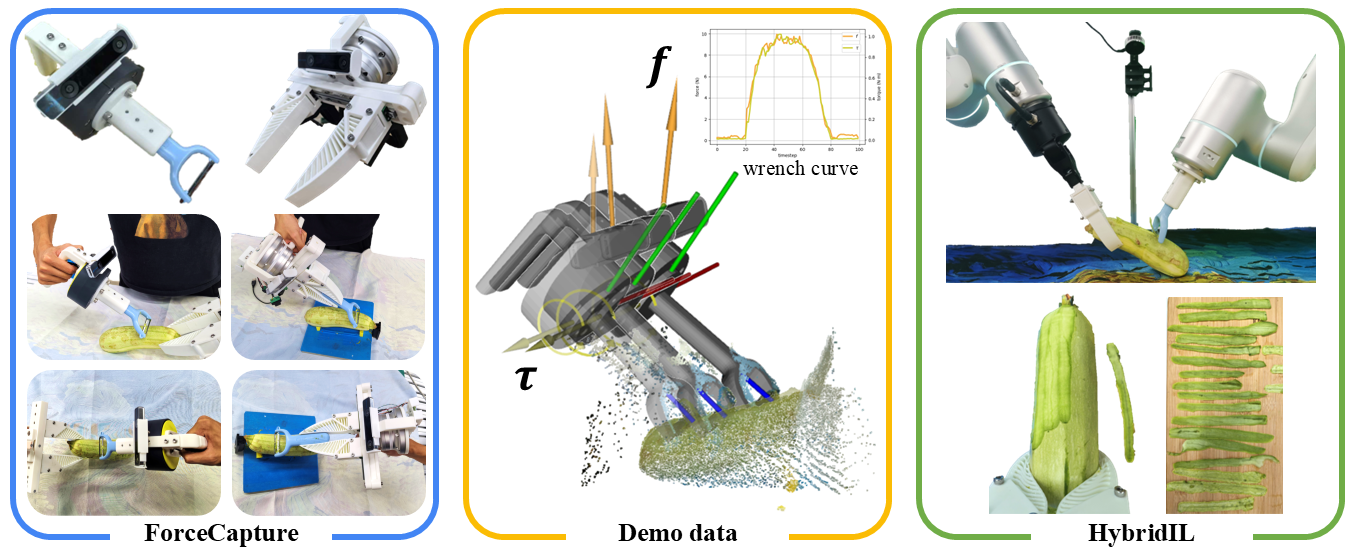

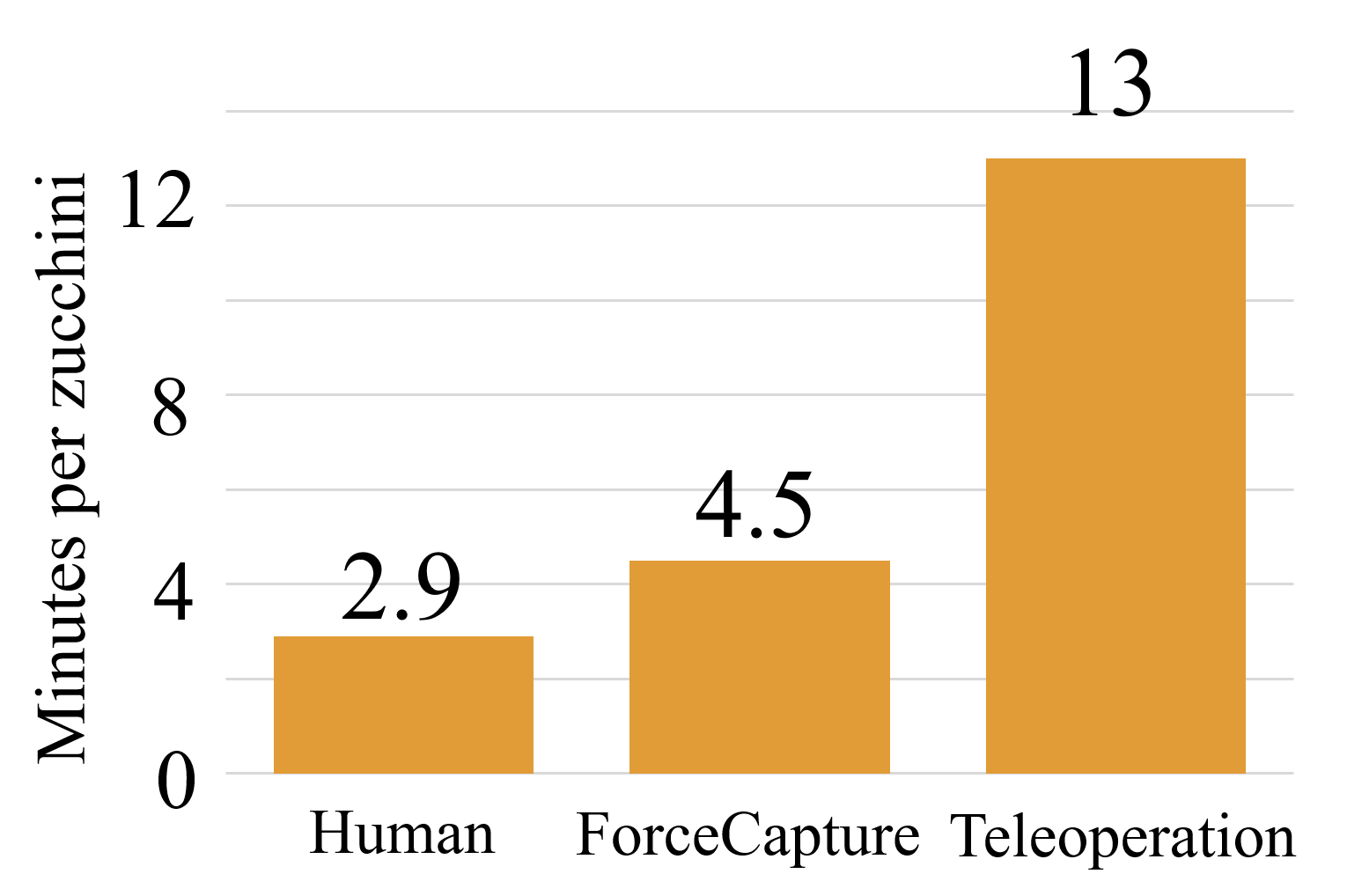

In most contact-rich manipulation tasks, humans apply time-varying forces to the target object, compensating for inaccuracies in the vision-guided hand trajectory. However, current robot learning algorithms primarily focus on trajectory-based policies, with limited attention given to learning force-related skills. To address this limitation, we introduce ForceMimic, a force-centric robot learning system that provides a natural, force-aware, and robot-free demonstration collection system, along with a hybrid force-motion imitation learning algorithm for robust contact-rich manipulation. Using the proposed ForceCapture system, an operator can peel a zucchini in 5 minutes, while force-feedback teleoperation takes over 13 minutes and struggles with task completion. With the collected data, we propose HybridIL to train a force-centric imitation learning model, equipped with a hybrid force-position control primitive that fits the predicted wrench-position parameters during robot execution. Experiments demonstrate that our approach enables the model to learn a more robust policy on the contact-rich task of vegetable peeling, improving the success rate by 54.5% in relative terms over state-of-the-art pure-vision-based imitation learning.

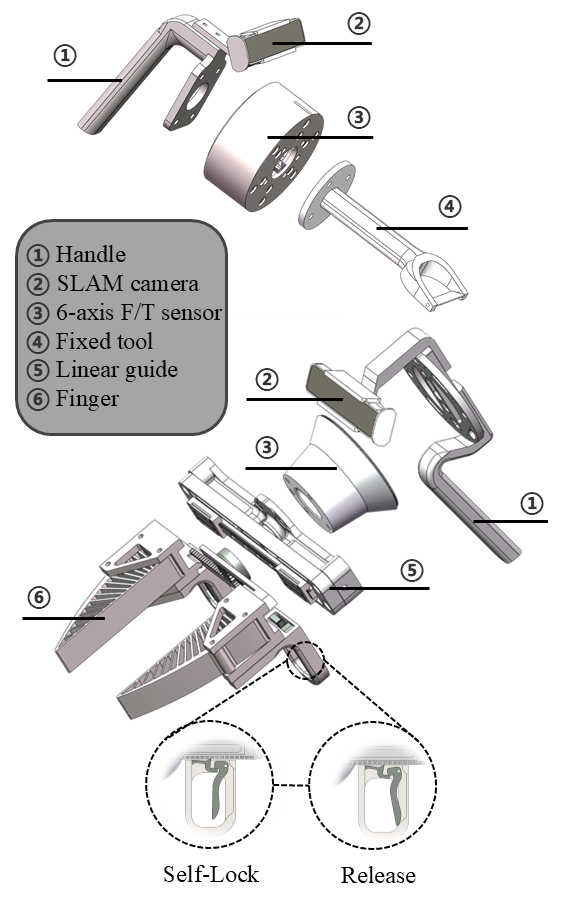

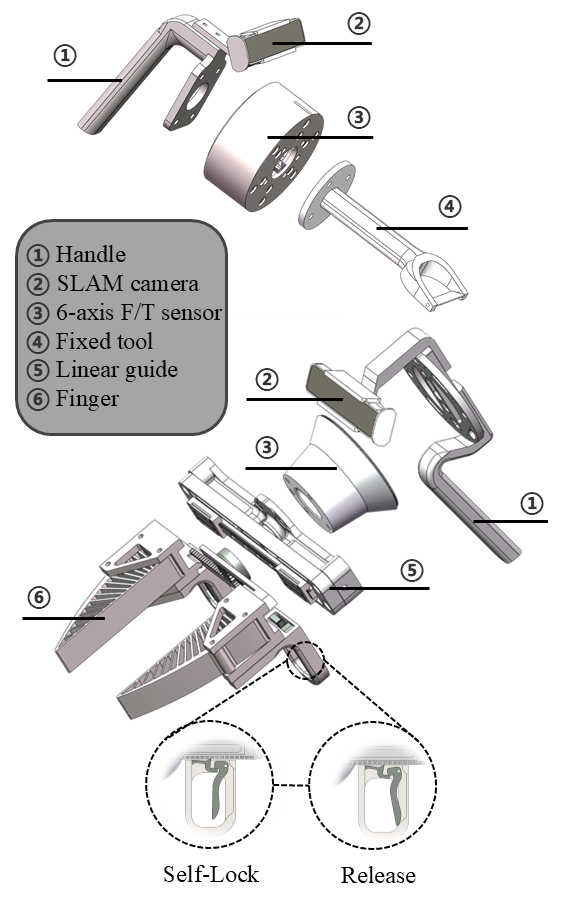

Two versions of ForceCapture are designed, one with a fixed tool and the other with an adaptive gripper. At their core, both designs place a six-axis force sensor between the end-effector and the user's gripping handle, which captures the effector-environment interaction forces.

ForceCapture is straightforward to manufacture, with the main body produced entirely by 3D printing. The total cost of the printed parts and encoder is approximately $50. The device equipped with the gripper weighs only 0.8 kg, of which the force sensor accounts for 0.5 kg and our accessories for only 0.3 kg, lighter than a can of cola. Its center of mass is positioned above the handle, which conforms to the natural force application habits of the human hand.

In addition to the effector-environment interaction forces, the sensor also captures the forces exerted by the human hand when opening and closing the gripper, as well as the gravity of the effector. A ratchet is inserted to isolate the human hand forces by locking unidirectionally when the gripper is closed, and a least-squares estimate of the effector's center of mass and weight, computed before data collection, is used to compensate for self-gravity.
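The self-gravity compensation described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the constant sensor bias terms, the world frame with z pointing up, and the availability of sensor-to-world rotations for each calibration sample are all assumptions made for illustration. The idea is to hold the unloaded device in several orientations, fit the weight and center of mass by least squares, and then subtract the predicted gravity wrench from each reading.

```python
import numpy as np

GRAVITY_DIR_WORLD = np.array([0.0, 0.0, -1.0])  # assumption: world z-axis points up


def skew(v):
    """Return the 3x3 matrix S such that S @ x == np.cross(v, x)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])


def gravity_dirs_in_sensor(rotations):
    """Unit gravity direction in the sensor frame for each (3, 3) sensor-to-world rotation."""
    return np.einsum("nji,j->ni", rotations, GRAVITY_DIR_WORLD)  # R^T @ g for each sample


def estimate_gravity_params(rotations, forces, torques):
    """Fit weight, center of mass, and constant sensor biases from unloaded readings.

    rotations: (N, 3, 3) sensor-to-world rotations; forces, torques: (N, 3) raw readings.
    """
    n = len(forces)
    g = gravity_dirs_in_sensor(rotations)
    eye_stack = np.tile(np.eye(3), (n, 1))

    # Force model (assumed): f = weight * g + force_bias
    A_f = np.hstack([g.reshape(-1, 1), eye_stack])
    x_f, *_ = np.linalg.lstsq(A_f, forces.reshape(-1), rcond=None)
    weight, force_bias = x_f[0], x_f[1:]

    # Torque model (assumed): tau = com x (weight * g) + torque_bias
    #                             = -skew(weight * g) @ com + torque_bias
    A_t = np.hstack([np.vstack([-skew(weight * gi) for gi in g]), eye_stack])
    x_t, *_ = np.linalg.lstsq(A_t, torques.reshape(-1), rcond=None)
    com, torque_bias = x_t[:3], x_t[3:]
    return weight, com, force_bias, torque_bias


def compensate(rotation, force, torque, weight, com, force_bias, torque_bias):
    """Remove the estimated gravity wrench and biases from one raw reading."""
    f_gravity = weight * (rotation.T @ GRAVITY_DIR_WORLD)
    f_ext = force - f_gravity - force_bias
    t_ext = torque - np.cross(com, f_gravity) - torque_bias
    return f_ext, t_ext
```

For the fit to be well conditioned, the calibration orientations should differ enough that the gravity direction in the sensor frame spans all three axes.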

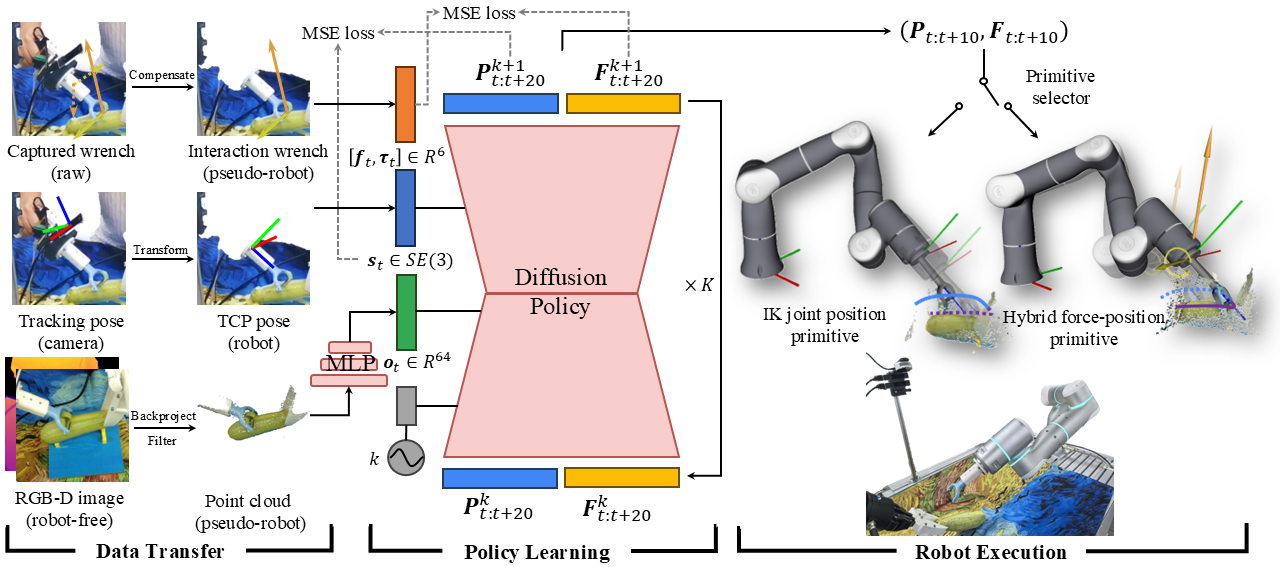

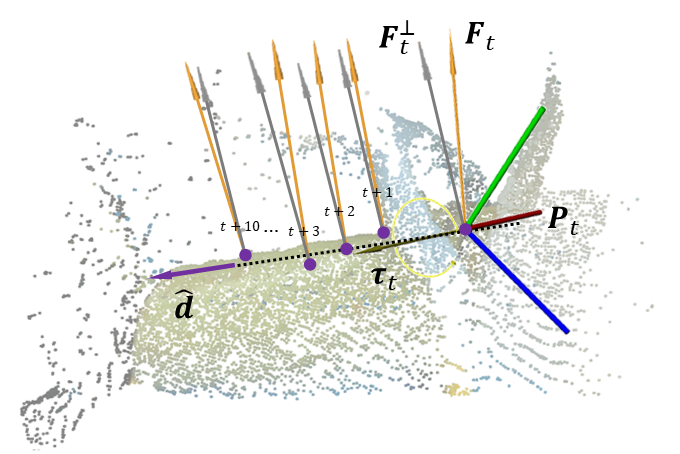

We first convert the collected robot-free data into (pseudo-)robot data, bridging the domain gap. The captured wrench is compensated for self-gravity effects. The pose recorded by the SLAM camera is transformed into the robot TCP pose. The RGB-D observation images are back-projected into a point cloud, from which unrelated points are filtered out. From this data, a diffusion-based policy is learned that predicts both the pose and wrench trajectories, conditioned on the encoded point cloud features, the pose history, and the diffusion timestep embeddings. Depending on the predicted force value, either the IK joint position primitive or the hybrid force-position primitive is selected, and the output force-position parameters are fitted to it to produce the executed actions.
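Two of the conversion steps above, back-projecting a depth image into a point cloud and mapping the camera pose to a TCP pose, can be sketched as follows. This is an illustrative sketch only: it assumes a pinhole camera with known intrinsics, uses an axis-aligned workspace crop as a stand-in for the unspecified point filter, and assumes a fixed, pre-calibrated camera-to-TCP transform. All function and parameter names are hypothetical.

```python
import numpy as np


def backproject_depth(depth, fx, fy, cx, cy):
    """Back-project a depth image (H, W), in metres, to an (M, 3) camera-frame point cloud."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column (u) and row (v) indices
    valid = depth > 0                               # drop pixels with no depth reading
    z = depth[valid]
    x = (u[valid] - cx) * z / fx                    # pinhole camera model
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)


def crop_workspace(points, lower, upper):
    """Keep points inside an axis-aligned box (assumed stand-in for the point filter)."""
    mask = np.all((points >= lower) & (points <= upper), axis=1)
    return points[mask]


def camera_pose_to_tcp(T_world_cam, T_cam_tcp):
    """Compose the SLAM camera pose with a fixed camera-to-TCP transform (4x4 matrices)."""
    return T_world_cam @ T_cam_tcp
```

A color image, if used, would be indexed with the same `valid` mask so that each point keeps its RGB value.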

The hybrid force-position control primitive is realized with the Flexiv RDK.
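The switch between the two primitives based on the predicted force can be sketched as a simple threshold rule. The sketch below is an assumption-laden illustration, not the authors' code and not the Flexiv RDK API: the threshold value, the use of the force norm, the `PrimitiveCommand` container, and the primitive names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

import numpy as np

FORCE_THRESHOLD_N = 2.0  # hypothetical switching threshold, in newtons


@dataclass
class PrimitiveCommand:
    name: str                            # "ik_joint_position" or "hybrid_force_position"
    target_pose: np.ndarray              # predicted TCP pose parameters
    target_wrench: Optional[np.ndarray]  # predicted wrench, only used in contact


def select_primitive(pred_pose, pred_wrench, threshold=FORCE_THRESHOLD_N):
    """Choose a control primitive from the predicted wrench [fx, fy, fz, tx, ty, tz]."""
    force_norm = float(np.linalg.norm(pred_wrench[:3]))
    if force_norm < threshold:
        # Free-space motion: track the pose only.
        return PrimitiveCommand("ik_joint_position", pred_pose, None)
    # Contact phase: pass both pose and wrench targets to the hybrid controller.
    return PrimitiveCommand("hybrid_force_position", pred_pose, pred_wrench)
```

The returned command would then be translated into the corresponding controller call on the robot side.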

We conduct a case study of data collection by peeling a zucchini with a single arm. The procedure involves picking up the peeler, peeling the zucchini on a stand, placing the peeler down, and then grasping the zucchini to adjust its orientation, repeating until the entire vegetable is peeled. We use the gripper version of ForceCapture, and the teleoperation setup follows the configuration in RH20T.

Demo clip by ForceCapture

Efficiency comparison

Demo clip by Teleoperation

The collection time of ForceCapture is very close to that of a human working by hand, and it requires almost no additional operator training and produces almost no operational errors.

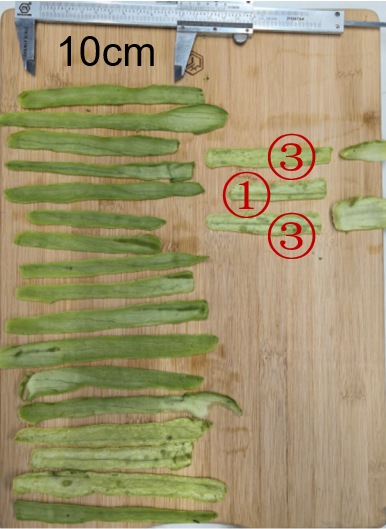

We use the fixed-tool version of ForceCapture to collect data on a total of 15 zucchinis, yielding 438 peeling skill segments and 30,199 action sequences in total. With the collected data, we train the HybridIL model and the baseline methods, each for 500 epochs.

Dataset replay by ForceCapture

Pose-augmented dataset replay by ForceCapture

Rollout on validation dataset by HybridIL

Rollout on real robot by HybridIL

For baselines that output wrench-position parameters, hybrid force-position control primitives were employed to match and switch between control modes. Raw DP and HybridIL were tested for 20 peeling actions, while Force DP and Force+Hybrid DP were tested for 10 peeling actions.

| Method | Success rate (%): motion correct | Success rate (%): peel length > 10 cm |
|---|---|---|
| Raw DP | 80 | 55 |
| Force DP | 60 | 10 |
| Force+Hybrid DP | 80 | 20 |
| HybridIL (proposed) | 100 | 85 |
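
As a sanity check, the 54.5% relative improvement quoted in the introduction appears to correspond to the peel-length success rates of HybridIL (85%) and Raw DP (55%). This pairing is an inference from the numbers in the table, not something the source states explicitly:

$$
\frac{85 - 55}{55} \approx 0.545 = 54.5\%
$$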

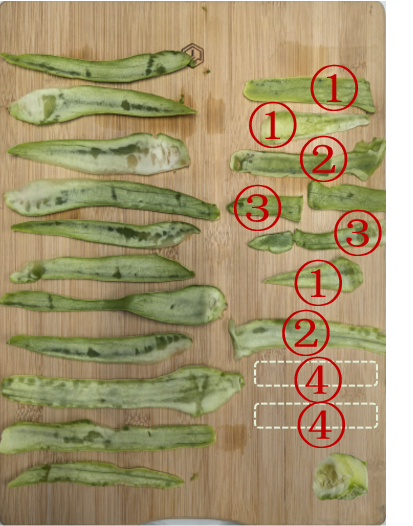

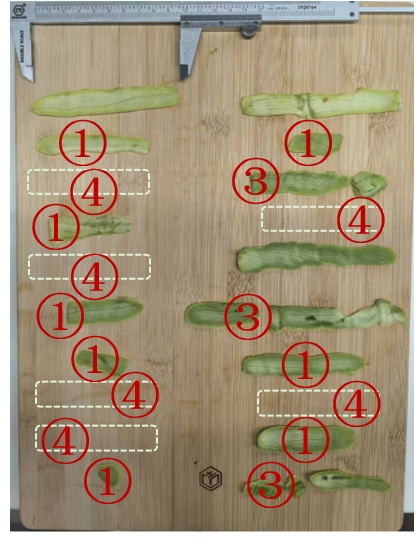

Peeled skins by Raw DP

Peeled skins by Force DP (left) and Force+Hybrid DP (right)

Peeled skins by HybridIL

Rollouts on real robot by Raw DP and HybridIL

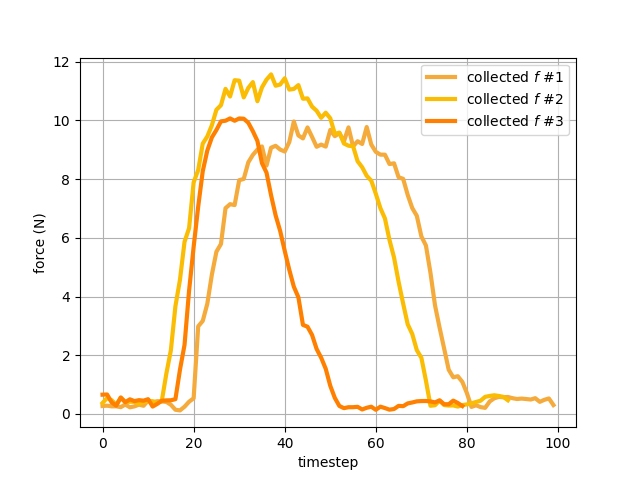

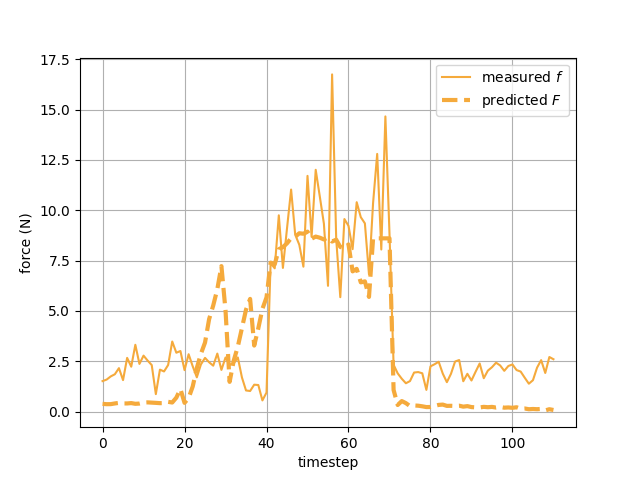

Force curve during data collection

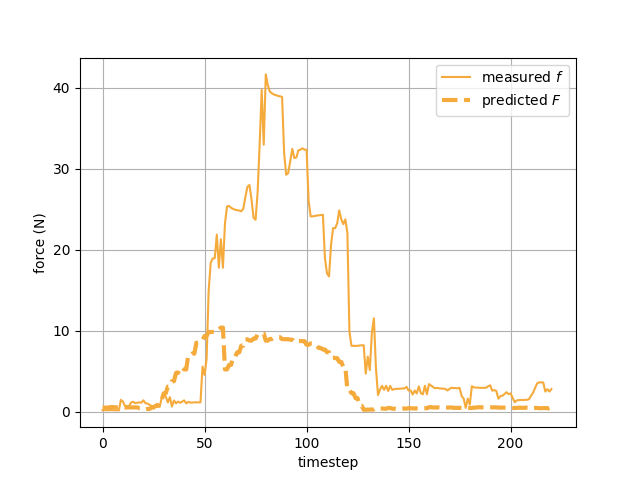

Force curve during rollout of Raw DP

Force curve during rollout of HybridIL

Force DP and Force+Hybrid DP performed poorly. The mismatch between the input forces and the force distribution in the dataset made it difficult for the models to predict the correct actions. HybridIL achieves higher success rates than the baselines, produces longer and smoother peeled skins, and exerts interaction forces during execution that more closely resemble those in the data collected by human operators.

If you find it helpful, please consider citing our work:

    @inproceedings{liu2025forcemimic,
      author={Liu, Wenhai and Wang, Junbo and Wang, Yiming and Wang, Weiming and Lu, Cewu},
      booktitle={2025 IEEE International Conference on Robotics and Automation (ICRA)},
      title={ForceMimic: Force-Centric Imitation Learning with Force-Motion Capture System for Contact-Rich Manipulation},
      year={2025}
    }

If you have further questions, please feel free to drop an email to sjtu-wenhai@sjtu.edu.cn, sjtuwjb3589635689@sjtu.edu.cn, sommerfeld@sjtu.edu.cn.